Highlights

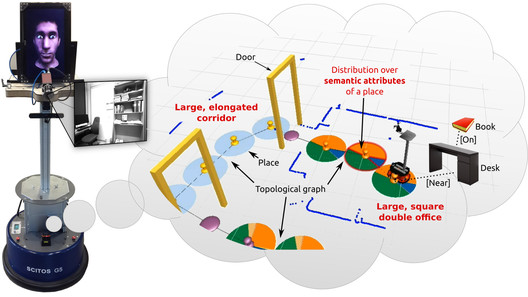

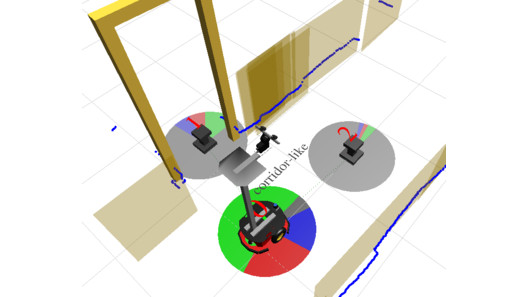

Conceptual visualization of the semantic map generated by the algorithm

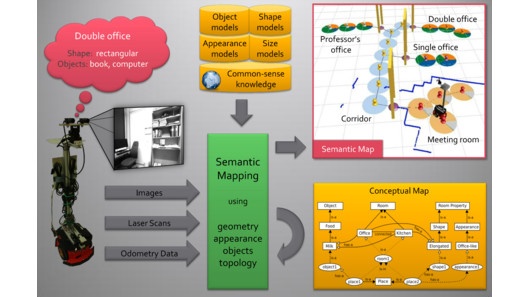

Overview of the inputs, outputs, and knowledge maintained by the algorithm

Video illustrating real-time semantic mapping of an office environment (Short)

Video illustrating real-time semantic mapping of an office environment (Full)

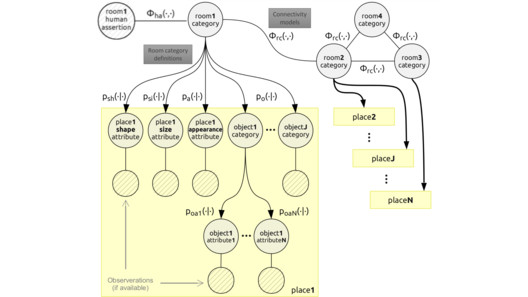

Probabilistic chain graph model of the conceptual map

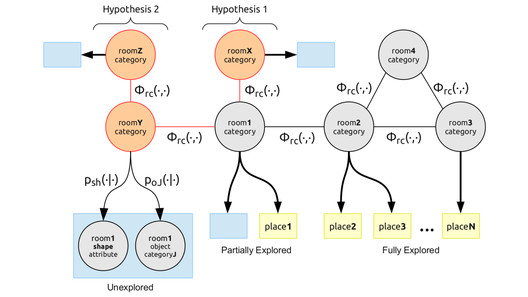

Unexplored space modeled using the chain graph

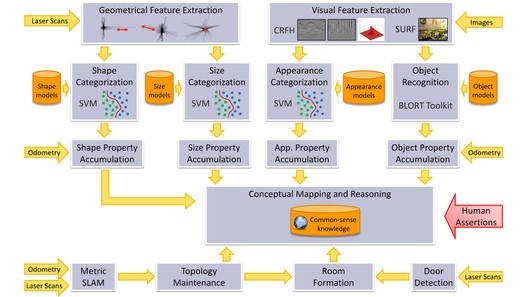

Structure of the system and data flow between its main components

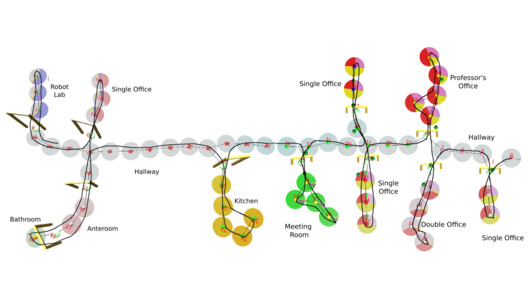

Visualization of beliefs about room categories on a topological map

Predictions of the existence of rooms of certain categories in unexplored space

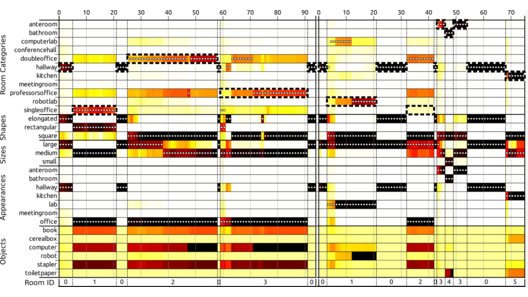

Beliefs about semantic attributes of spatial entities during mapping