Deep Generative Spatial Models for Mobile Robots

About

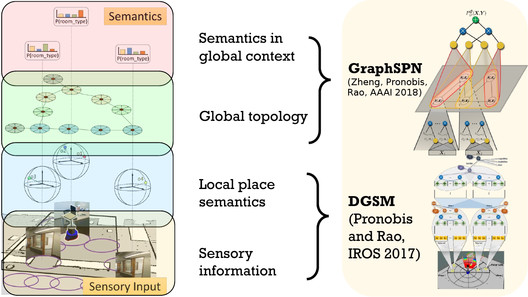

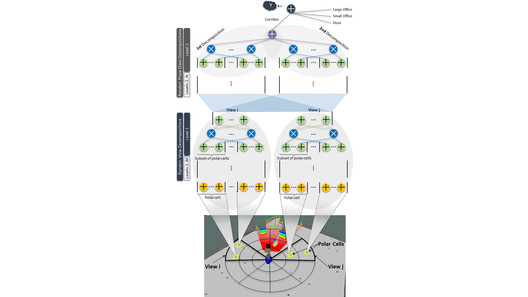

In this project, we developed a new probabilistic framework that allows mobile robots to autonomously learn deep, generative models of their environments that span multiple levels of abstraction. Unlike traditional approaches that combine engineered models for low-level features, geometry, and semantics, our approach leverages recent advances in Sum-Product Networks (SPNs) and deep learning to learn a single, universal model of the robot's spatial environment. Our model is fully probabilistic and generative, and represents a joint distribution over spatial information ranging from low-level geometry to semantic interpretations.

The model is capable of solving a wide range of tasks: from semantic classification of places, uncertainty estimation, and novelty detection, to generation of place appearances based on semantic information and prediction of missing data in partial observations.
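As a rough illustration of how one joint distribution can serve several of these tasks, here is a minimal sketch of a toy Sum-Product Network in plain Python. It is not the architecture from the project and does not use the LibSPN API. The two binary "occupancy cells", the class names, all probabilities, the novelty threshold, and the function names are hypothetical, chosen only to keep the example small.

```python
import itertools

# Toy SPN: a root sum node over two product nodes, one per place class.
# Each product multiplies a class indicator with Bernoulli leaves over
# two binary occupancy cells. All numbers are made up for illustration.
CLASSES = ["corridor", "office"]
WEIGHTS = [0.5, 0.5]        # sum-node weights, i.e. P(class)
LEAF_P = [[0.9, 0.1],       # P(cell_i = 1 | corridor)
          [0.2, 0.8]]       # P(cell_i = 1 | office)


def leaf(p, x):
    """Bernoulli leaf; a missing value (None) is marginalized out to 1."""
    if x is None:
        return 1.0
    return p if x == 1 else 1.0 - p


def joint(cells, label=None):
    """Evaluate the network bottom-up: P(cells, label), None = marginalized."""
    total = 0.0
    for k, weight in enumerate(WEIGHTS):
        if label is not None and CLASSES[k] != label:
            continue  # the class indicator for this branch evaluates to 0
        prod = 1.0
        for p, x in zip(LEAF_P[k], cells):
            prod *= leaf(p, x)
        total += weight * prod
    return total


def classify(cells):
    """Semantic classification: P(label | cells) from two evaluations."""
    evidence = joint(cells)
    return {c: joint(cells, c) / evidence for c in CLASSES}


def is_novel(cells, threshold=0.2):
    """Novelty detection: low marginal likelihood under the learned model."""
    return joint(cells) < threshold


def complete(cells):
    """Fill in missing cells with the most likely values.

    Brute-force search stands in for proper MPE inference and is only
    feasible for a handful of variables.
    """
    missing = [i for i, x in enumerate(cells) if x is None]
    best, best_p = None, -1.0
    for bits in itertools.product([0, 1], repeat=len(missing)):
        candidate = list(cells)
        for i, b in zip(missing, bits):
            candidate[i] = b
        p = joint(candidate)
        if p > best_p:
            best, best_p = candidate, p
    return best


if __name__ == "__main__":
    print(classify([1, 0]))      # posterior over place classes
    print(is_novel([1, 1]))      # unlikely under both classes
    print(complete([1, None]))   # predict the unobserved cell
```

The same `joint` function answers all three queries; only the evidence that is clamped or marginalized changes. That is the property a fully probabilistic, generative model provides.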

Experiments on laser-range data from a mobile robot show that the proposed universal model obtains performance superior to state-of-the-art models fine-tuned to one specific task, such as Generative Adversarial Networks (GANs) or SVMs.

Related Publications

- Learning Graph-Structured Sum-Product Networks for Probabilistic Semantic Maps. In: AAAI Conference on Artificial Intelligence (AAAI), 2018. Acceptance rate 24.6%, oral presentation.
- Learning Deep Generative Spatial Models for Mobile Robots. In: International Conference on Intelligent Robots and Systems (IROS), 2017.
- LibSPN: A Library for Learning and Inference with Sum-Product Networks and TensorFlow. In: ICML 2017 Workshop on Principled Approaches to Deep Learning, 2017.
- Deep Spatial Affordance Hierarchy: Spatial Knowledge Representation for Planning in Large-scale Environments. In: RSS 2017 Workshop on Spatial-Semantic Representations in Robotics, 2017.
- Learning Semantic Maps with Topological Spatial Relations Using Graph-Structured Sum-Product Networks. In: IROS 2017 Workshop on Machine Learning Methods for High-Level Cognitive Capabilities in Robotics, 2017.
- Learning Deep Generative Spatial Models for Mobile Robots. In: RSS 2017 Workshop on Spatial-Semantic Representations in Robotics, 2017.